Gemma 3 Technical Deep Dive - Architecture, Performance, and Implications

Google DeepMind’s release of the Gemma 3 technical report marks a significant iteration in its family of open-weight models. Building on Gemma 1 and 2, Gemma 3 introduces multimodality, enhanced multilingual capabilities, and much longer context windows, while explicitly targeting efficiency on consumer-grade hardware, a crucial consideration for democratizing access to large models.

1. Introduction

Gemma 3 extends the Gemma family with models ranging from 1B to 27B parameters. Key advancements presented include:

- Multimodality: Integration of vision understanding via a tailored, frozen SigLIP encoder, processing images as sequences of soft tokens.

- Long Context: Support for context windows of at least 128K tokens (32K for the 1B model), enabled by architectural modifications.

- Architectural Efficiency: A novel interleaved local/global attention mechanism specifically designed to mitigate the KV cache memory bottleneck inherent in long-context inference.

- Enhanced Performance: Superior results compared to Gemma 2, particularly in instruction-tuned (IT) variants. This is attributed to knowledge distillation and a refined post-training recipe incorporating advanced RL techniques.

- Improved Multilinguality: Better representation and handling of non-English languages achieved through adjustments in the pre-training data mixture and the adoption of the Gemini 2.0 tokenizer.

This analysis will dissect these aspects, examining the architectural rationale, training methodologies, evaluation paradigms, and the inherent trade-offs and limitations.

2. Model Architecture

While retaining the foundational decoder-only Transformer architecture, Gemma 3 incorporates several critical modifications compared to its predecessors, primarily focused on enabling long context efficiently and integrating vision.

KV Cache Management: Interleaved Local/Global Attention

A primary bottleneck for deploying long-context models is the KV cache size, which scales linearly with sequence length and number of layers, quickly exceeding typical device memory. Gemma 3 tackles this with a hybrid attention strategy:

- 5:1 Interleaving: The architecture alternates between local (sliding window) self-attention and global self-attention layers. Specifically, it employs a repeating pattern of 5 local layers followed by 1 global layer.

- Short Local Span: Local attention layers operate with a constrained sliding window of only 1024 tokens. This significantly limits the contribution of these layers to the overall KV cache size.

- Efficient Long Context Processing: Consequently, only the global attention layers (representing 1/6th of the total layers) need to store Keys and Values for the entire 128K context window. This architectural choice drastically reduces the KV cache footprint compared to a model where all layers attend globally, striking a balance between capturing long-range dependencies and maintaining inference feasibility on resource-constrained hardware.
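To make the savings concrete, here is a minimal sketch that counts KV cache bytes for one sequence in a global-only stack versus the 5:1 local/global pattern with a 1024-token window. The layer count, KV-head count, head dimension, and bytes per value are hypothetical round numbers, not Gemma 3's published configuration, and the function names are our own.

```python
def layer_is_global(layer_idx: int, local_per_global: int = 5) -> bool:
    """Every (local_per_global + 1)-th layer is global: 0-based layers 5, 11, 17, ..."""
    return (layer_idx + 1) % (local_per_global + 1) == 0


def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,
    window: int = 1024,
    interleaved: bool = True,
) -> int:
    """Approximate KV cache size for a single sequence.

    Each cached token stores one key and one value vector per KV head,
    i.e. 2 * n_kv_heads * head_dim values per layer.
    """
    per_token = 2 * n_kv_heads * head_dim * bytes_per_value
    total = 0
    for i in range(n_layers):
        if interleaved and not layer_is_global(i):
            cached = min(seq_len, window)  # local layer keeps only the sliding window
        else:
            cached = seq_len  # global layer keeps the full context
        total += cached * per_token
    return total


if __name__ == "__main__":
    # HYPOTHETICAL configuration, not the published Gemma 3 hyperparameters.
    cfg = dict(n_layers=48, n_kv_heads=8, head_dim=128, seq_len=128 * 1024)
    full = kv_cache_bytes(**cfg, interleaved=False)
    hybrid = kv_cache_bytes(**cfg, interleaved=True)
    print(f"global-only:      {full / 2**30:.2f} GiB")
    print(f"5:1 local/global: {hybrid / 2**30:.2f} GiB")
    print(f"reduction:        {full / hybrid:.1f}x")
```

Under these assumptions a 128K-token sequence needs 24 GiB of cache with all-global layers but roughly 4.2 GiB with the interleaved pattern, about a 5.8x reduction; almost all of the remainder comes from the 8 global layers.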

```mermaid
sequenceDiagram
    participant I as Input Sequence (up to 128K)
    participant L5 as 5x Local Attention Layers
    participant G1 as 1x Global Attention Layer
    participant O as Output Embeddings
    I->>L5: Process with 1024-token sliding window
    Note right of L5: KV cache contribution per layer<br/>scales with window size (1024)
    L5->>G1: Process full context (128K)
    Note right of G1: KV cache contribution per layer<br/>scales with full context length (128K)
    loop Multiple Blocks
        G1->>L5: Output feeds into next block
        L5->>G1: ...
    end
    G1->>O: Final Output
```

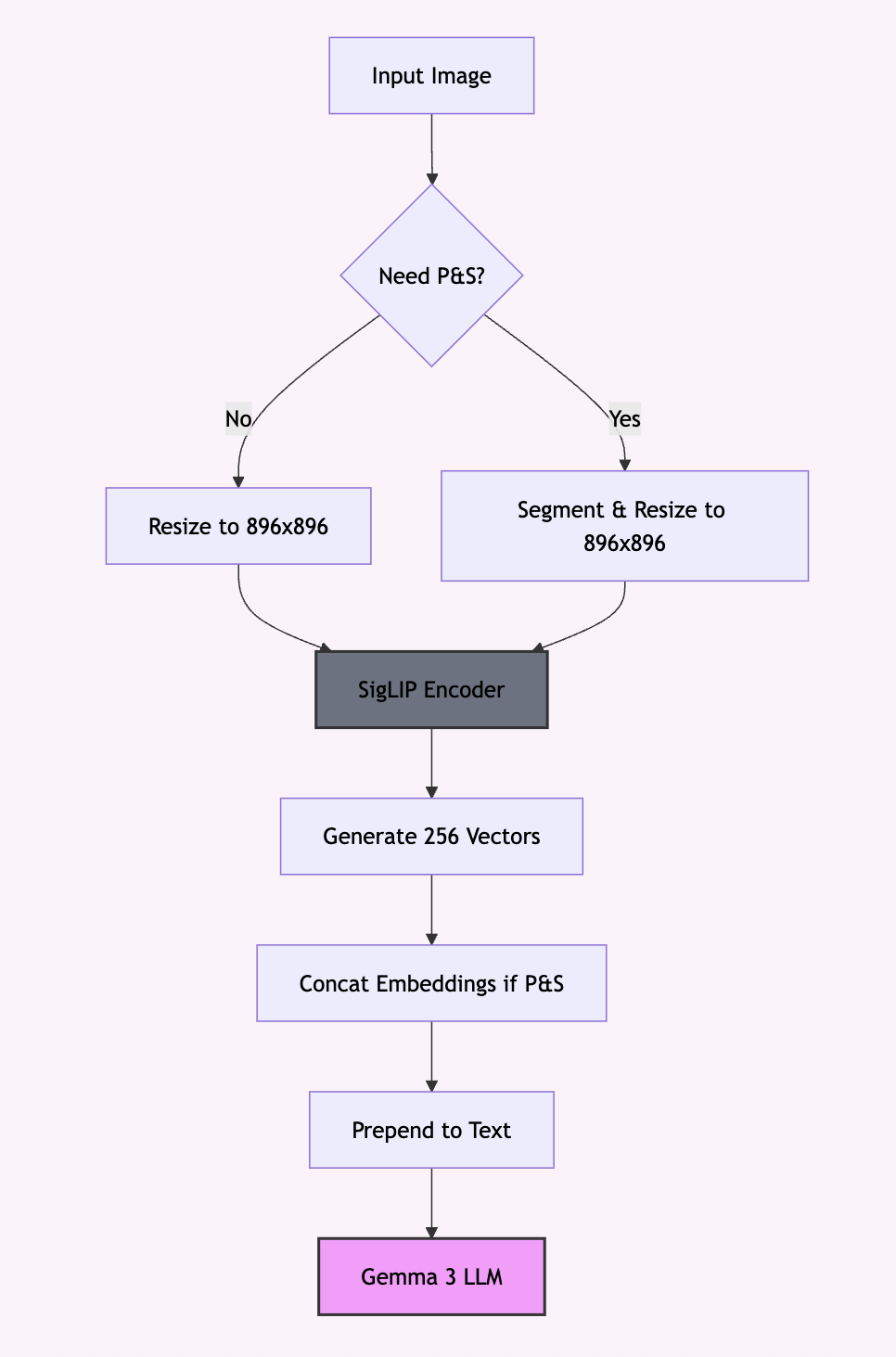
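The two layer types differ only in which keys each query may attend to. A minimal NumPy sketch of the two causal masks follows, with a masked softmax showing how they are applied; the toy sizes are illustrative, and a production kernel would avoid materializing a full mask for 128K tokens.

```python
import numpy as np


def causal_mask(seq_len: int) -> np.ndarray:
    """Global causal mask: query i may attend to every key j <= i."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return j <= i


def sliding_window_mask(seq_len: int, window: int) -> np.ndarray:
    """Local causal mask: query i sees only the last `window` keys, i - window < j <= i."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    return (j <= i) & (j > i - window)


def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Row-wise softmax where disallowed positions get zero weight."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # diagonal is always allowed
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    # Toy sizes: 6 tokens with a window of 3 (Gemma 3's local layers use 1024).
    print(sliding_window_mask(6, 3).astype(int))
    print(causal_mask(6).astype(int))
```

Because a local layer never looks further back than `window` tokens, its cache can be a fixed-size ring buffer, which is what keeps the local layers' KV footprint independent of context length.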

Vision Integration: SigLIP and Pan & Scan (P&S)

Gemma 3 integrates visual processing using:

- Vision Encoder: A 400M parameter variant of the SigLIP Vision Transformer (Zhai et al., 2023). Notably, this encoder is frozen during the language model’s training. This simplifies training and reduces computational cost but potentially limits the synergy between visual and textual feature extraction compared to end-to-end training.

- Token Condensation: Embeddings from the SigLIP encoder are condensed into a fixed-length sequence of 256 vectors (“soft tokens”). These visual tokens are prepended to the text token sequence, serving as the language model’s input representation of the image.

- Pan & Scan (P&S): The SigLIP encoder operates at a fixed resolution (896x896). To handle arbitrary resolutions and aspect ratios, particularly for tasks that involve reading text or resolving fine details, Gemma 3 applies an inference-time P&S strategy (inspired by Liu et al., 2023). It adaptively segments the input image into non-overlapping crops of equal size that cover the whole image, resizes each crop to 896x896, encodes the crops individually, and concatenates the resulting 256-token sequences, up to a predefined maximum number of crops. The windowing is applied only when necessary. While this improves handling of high-resolution and non-square images, it adds computational overhead at inference time.
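The token budget implied by these bullets can be sketched in a few lines. This NumPy example rests on stated assumptions: a 14-pixel patch size (so an 896x896 image yields a 64x64 patch grid), condensation by plain 4x4 average pooling down to 256 vectors, and a hypothetical crop-count heuristic standing in for the real P&S rule. None of the function names come from a Gemma codebase.

```python
import math

import numpy as np

TOKENS_PER_CROP = 256  # soft tokens per encoded image or crop (from the text)
ENCODER_RES = 896      # SigLIP input resolution (from the text)
PATCH = 14             # ASSUMPTION: patch size, giving a 64x64 patch grid at 896px


def pool_to_soft_tokens(patches: np.ndarray, out_side: int = 16) -> np.ndarray:
    """Average-pool a (side*side, dim) patch grid down to out_side*out_side vectors.

    ASSUMPTION: condensation is plain k x k average pooling over the patch grid.
    """
    n, dim = patches.shape
    side = math.isqrt(n)
    if side * side != n or side % out_side:
        raise ValueError("expected a square grid whose side is divisible by out_side")
    k = side // out_side
    grid = patches.reshape(out_side, k, out_side, k, dim)
    return grid.mean(axis=(1, 3)).reshape(out_side * out_side, dim)


def pan_and_scan_num_crops(width: int, height: int, max_crops: int = 4,
                           min_aspect: float = 1.5) -> int:
    """HYPOTHETICAL heuristic: equal, non-overlapping crops along the longer side.

    Near-square images are encoded once; elongated ones get about
    long_side / short_side crops, capped at max_crops.
    """
    long_side, short_side = max(width, height), min(width, height)
    if long_side / short_side < min_aspect:
        return 1
    return min(max_crops, math.ceil(long_side / short_side))


def soft_token_count(width: int, height: int, max_crops: int = 4) -> int:
    """Soft tokens fed to the language model: 256 per encoded crop."""
    return TOKENS_PER_CROP * pan_and_scan_num_crops(width, height, max_crops)


if __name__ == "__main__":
    grid_side = ENCODER_RES // PATCH  # 64 under the patch-size assumption
    patches = np.random.default_rng(0).normal(size=(grid_side**2, 8))
    print(pool_to_soft_tokens(patches).shape)  # 4096 patches pooled to 256 vectors
    print(soft_token_count(896, 896), soft_token_count(3584, 896))
```

The sketch makes the trade-off visible: a wide 4:1 image costs four times the visual tokens of a square one, which is where the extra inference overhead of P&S comes from.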

Other Architectural Details

- Long Context Enablers: To effectively utilize the extended context, the Rotary Position Embeddings (RoPE) base frequency for the global self-attention layers is increased significantly from 10k (used in Gemma 2 and Gemma 3’s local layers) to 1M. This adaptation, drawing inspiration from positional interpolation techniques (Chen et al., 2023), helps the model generalize positional information over much longer sequences.

- Normalization & Attention Refinements: The models use Grouped-Query Attention (GQA) for efficiency, apply RMSNorm both before and after each sub-layer (pre-norm and post-norm), and notably replace Gemma 2's attention-logit soft-capping with QK-norm. This QK-normalization (inspired by recent works like (Dehghani et al., 2023), (Wortsman et al., 2023), (Team, 2024)) likely contributes to improved training stability or performance scaling.

- Tokenizer: The adoption of the Gemini 2.0 SentencePiece tokenizer (262k vocabulary) is a key change from earlier Gemma versions. Its design aims for better handling of multilingual text, digits, and whitespace, which is crucial for improved performance across diverse languages and domains like code.
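Two of these changes are easy to probe numerically. The NumPy sketch below assumes a head dimension of 128 and the common RoPE frequency formula; it compares the slowest rotation period under base 10k and base 1M, and computes QK-norm style attention logits in which queries and keys are RMS-normalized before the dot product. Function names are our own, and the learned norm scales a real model would apply are omitted.

```python
import numpy as np


def rope_inv_freq(head_dim: int, base: float) -> np.ndarray:
    """Standard RoPE inverse frequencies base**(-2i/head_dim), one per rotated pair."""
    return base ** (-np.arange(0, head_dim, 2) / head_dim)


def max_wavelength(head_dim: int, base: float) -> float:
    """Tokens needed for the slowest-rotating pair to complete one full turn."""
    return float(2 * np.pi / rope_inv_freq(head_dim, base).min())


def rms_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """RMSNorm over the last axis (the learned per-channel scale is omitted here)."""
    return x / np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)


def qk_norm_logits(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Scaled dot-product logits computed after normalizing queries and keys (QK-norm)."""
    q, k = rms_norm(q), rms_norm(k)
    return q @ k.T / np.sqrt(q.shape[-1])


if __name__ == "__main__":
    for base in (1e4, 1e6):
        print(f"base={base:.0e}: slowest period ~{max_wavelength(128, base):,.0f} tokens")
    rng = np.random.default_rng(0)
    q, k = 1000 * rng.normal(size=(4, 128)), 1000 * rng.normal(size=(4, 128))
    print(np.abs(qk_norm_logits(q, k)).max())  # bounded by sqrt(128) regardless of input scale
```

Under these assumptions, base 10k gives a slowest period of roughly 54K tokens, shorter than a 128K window, while base 1M stretches it to about 5M tokens. QK-norm bounds each logit by the square root of the head dimension (before any learned scale) no matter how large the raw activations grow, which is one intuition for why it can stand in for soft-capping as a stability measure.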

3. Training and Data Strategy

The training process involves distinct pre-training and instruction fine-tuning phases, leveraging large datasets and sophisticated optimization techniques.

Pre-training

- Dataset Scale: Models were trained on substantial token budgets ranging from 2T (1B model) to 14T (27B model). This slight increase compared to Gemma 2 accommodates the new multimodal and expanded multilingual data components.

- Data Composition: The pre-training mixture comprises web documents, code, mathematics, and dialogue data. Emphasis was placed on increasing the proportion of multilingual data (both monolingual corpora across various languages and parallel data) and incorporating image-text pairs for multimodal grounding. Strategies inspired by (Chung et al., 2023) were used to manage language imbalance during sampling.

- Data Hygiene: Rigorous filtering was applied to mitigate risks associated with harmful content, remove personally identifiable information (PII), decontaminate evaluation benchmark data from the training set (a critical step for reliable evaluation), and apply quality heuristics, potentially re-weighting data based on quality metrics (Sachdeva et al., 2024).

- Knowledge Distillation: Pre-training incorporates knowledge distillation (Hinton et al., 2015) from larger, unspecified teacher models. For each token, 256 logits are sampled according to the teacher's output probabilities; the teacher distribution is set to zero outside this sample and renormalized. The student is trained with cross-entropy against this softened, sparse target instead of the standard one-hot next-token target, which pulls it toward the teacher's output distribution rather than only the observed token.
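A minimal single-position sketch of this sampled-logit distillation loss in NumPy follows. Sampling the 256 ids without replacement is our assumption, the function names are our own, and a real training loop would compute this batched on accelerators rather than per position.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax over a 1-D logit vector."""
    shifted = logits - logits.max()
    return shifted - np.log(np.exp(shifted).sum())


def sampled_distillation_loss(teacher_logits, student_logits, k=256, rng=None):
    """Cross-entropy of the student against a teacher target restricted to k sampled ids.

    Both inputs are 1-D logit vectors over the vocabulary for a single position.
    """
    rng = np.random.default_rng() if rng is None else rng
    p_teacher = np.exp(log_softmax(teacher_logits))
    # Draw k distinct vocabulary ids, weighted by teacher probability
    # (ASSUMPTION: sampling without replacement).
    ids = rng.choice(p_teacher.size, size=k, replace=False, p=p_teacher)
    # Keep teacher mass only on the sampled ids, then renormalize to sum to 1.
    target = np.zeros_like(p_teacher)
    target[ids] = p_teacher[ids]
    target /= target.sum()
    # Soft-target cross-entropy: -sum_v target[v] * log p_student[v].
    return float(-(target * log_softmax(student_logits)).sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vocab = 4096
    teacher, student = rng.normal(size=vocab), rng.normal(size=vocab)
    print(sampled_distillation_loss(teacher, student, k=256, rng=rng))
```

The appeal of the sparse target is storage: only 256 (id, probability) pairs per token need to be kept from the teacher instead of a full distribution over a 262k-entry vocabulary.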

Instruction Fine-tuning (Post-training)

This phase appears critical to Gemma 3’s strong performance on user-oriented tasks, transforming the base models into capable instruction-following agents.

- Refined Recipe: The report mentions a “novel post-training recipe,” although specific details remain limited. It clearly involves an enhanced form of knowledge distillation, this time using a large instruction-tuned teacher model.

- Reinforcement Learning (RL): Advanced RL techniques are employed, likely extending methods like BOND (Sessa et al., 2024), WARM (Ramé et al., 2024), and WARP (Ramé et al., 2024).

- Multi-Objective Optimization: RL training utilized a diverse set of reward functions targeting helpfulness, mathematical reasoning, coding proficiency, general reasoning, instruction adherence, multilingual fluency, and safety (minimizing harmfulness). These rewards leverage a combination of human feedback (RLHF), feedback from automated systems like code execution environments (Gehring et al., 2024), and ground-truth outcomes for tasks like mathematics ((DeepSeek-AI, 2024), (Lambert et al., 2024)).

- Targeted Filtering: Additional data filtering specific to the post-training phase was implemented to enhance factuality, encourage attribution, reduce hallucinations, and steer the model away from unsafe or undesirable outputs.
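WARM, one of the methods the post-training recipe reportedly builds on, merges several reward models by averaging their weights. The NumPy sketch below shows only that merging step on a toy linear reward model; it is not Gemma 3's recipe, and the parameter layout and the `linear_reward` helper are hypothetical.

```python
import numpy as np


def weight_average(models):
    """Uniform average of parameter dicts with identical keys and shapes."""
    return {name: np.mean([m[name] for m in models], axis=0) for name in models[0]}


def linear_reward(params, features):
    """Toy reward model r(x) = w . phi(x) + b, standing in for a fine-tuned network."""
    return float(features @ params["w"] + params["b"])


if __name__ == "__main__":
    # HYPOTHETICAL reward models fine-tuned from a shared initialization.
    reward_models = [
        {"w": np.array([1.0, 0.0]), "b": np.array(0.0)},
        {"w": np.array([0.0, 1.0]), "b": np.array(2.0)},
    ]
    merged = weight_average(reward_models)
    x = np.array([3.0, 5.0])
    print([linear_reward(rm, x) for rm in reward_models], linear_reward(merged, x))
```

For a linear model, averaging weights is identical to averaging predictions. For deep reward models it is not, and, as we understand the WARM paper, the technique relies on the models sharing a pre-trained initialization so that their weights can be averaged without collapsing performance, yielding a single reward model that is more robust to reward hacking than any one member.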

Quantization Aware Training (QAT)

Recognizing the need for efficient deployment, Gemma 3 models undergo QAT (Jacob et al., 2018). This involves further fine-tuning the models specifically for lower-precision inference (targeting per-channel int4, per-block int4, and switched fp8 formats popular in frameworks like llama.cpp). Instead of quantizing post-training, QAT adapts the weights during training, using the full-precision model’s output probabilities as targets, thereby minimizing the performance degradation often associated with quantization.
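The forward pass of QAT runs the network on "fake-quantized" weights: values are rounded to the int4 grid and immediately scaled back to floats. A minimal NumPy sketch of symmetric per-channel and per-block int4 fake quantization follows; the block size of 32 and the symmetric [-8, 7] scheme are assumptions, and in an autodiff framework the rounding would be bypassed in the backward pass with a straight-through estimator, which this forward-only sketch does not show.

```python
import numpy as np

INT4_MIN, INT4_MAX = -8, 7


def fake_quant_int4_per_channel(w: np.ndarray) -> np.ndarray:
    """Symmetric int4 fake quantization with one scale per output row (channel)."""
    scale = np.abs(w).max(axis=1, keepdims=True) / INT4_MAX
    scale = np.where(scale == 0, 1.0, scale)  # avoid division by zero for all-zero rows
    q = np.clip(np.round(w / scale), INT4_MIN, INT4_MAX)
    return q * scale


def fake_quant_int4_per_block(w: np.ndarray, block: int = 32) -> np.ndarray:
    """Same scheme, but one scale per contiguous block of `block` input weights."""
    rows, cols = w.shape
    if cols % block:
        raise ValueError("columns must be divisible by the block size")
    blocks = w.reshape(rows, cols // block, block)
    scale = np.abs(blocks).max(axis=-1, keepdims=True) / INT4_MAX
    scale = np.where(scale == 0, 1.0, scale)
    q = np.clip(np.round(blocks / scale), INT4_MIN, INT4_MAX)
    return (q * scale).reshape(rows, cols)


if __name__ == "__main__":
    w = np.random.default_rng(0).normal(size=(8, 256))
    for name, fq in [("per-channel", fake_quant_int4_per_channel(w)),
                     ("per-block", fake_quant_int4_per_block(w))]:
        print(name, "mean abs error:", float(np.abs(w - fq).mean()))
```

Per-block scales track local weight magnitudes more closely than one scale per row, at the cost of storing more scales; QAT then lets the model adapt its weights to whichever grid it will be served on.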

| Model | Weights (bf16) | Weights (int4) | Weights + KV cache (int4, 32K ctx, 8-bit KV) | Weights + KV cache (SFP8, 32K ctx, 8-bit KV) |
|---|---|---|---|---|
| 1B | 2.0 GB | 0.5 GB | ~1.6 GB | ~1.9 GB |
| 4B | 8.0 GB | 2.6 GB | ~7.6 GB | ~9.1 GB |
| 12B | 24.0 GB | 6.6 GB | ~22.0 GB | ~27.3 GB |
| 27B | 54.0 GB | 14.1 GB | ~34.0 GB | ~46.1 GB |

Table: Approximate Memory Footprints (derived from Table 3 in the report). Note the significant contribution of the KV cache even when quantized.
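The weight-only columns follow from simple arithmetic: bytes per parameter times parameter count. This sketch uses the nominal model sizes as parameter counts, which is an assumption, since the exact counts differ slightly.

```python
# Nominal parameter counts (ASSUMPTION: the exact counts in the report differ).
NOMINAL_PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}
BYTES_PER_PARAM = {"bf16": 2.0, "int4": 0.5}  # 16 bits and 4 bits per weight


def weight_gb(n_params: float, fmt: str) -> float:
    """Raw weight storage in GB (1 GB = 1e9 bytes), ignoring quantization scales."""
    return n_params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    for name, n in NOMINAL_PARAMS.items():
        print(name, {fmt: round(weight_gb(n, fmt), 1) for fmt in BYTES_PER_PARAM})
```

The bf16 results (2.0, 8.0, 24.0, 54.0 GB) match the table exactly. The int4 results (0.5, 2.0, 6.0, 13.5 GB) fall below the table's 2.6, 6.6, and 14.1 GB for the larger models, presumably because actual parameter counts exceed the nominal ones (the 4B and larger models also carry the ~400M-parameter vision encoder) and because quantization scales and any tensors kept at higher precision add overhead.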

4. Performance Evaluation

Gemma 3 demonstrates substantial improvements over its predecessor, Gemma 2, and achieves performance levels that are highly competitive within the open model landscape, even compared to significantly larger models.

General Capabilities (Instruction-Tuned Models)

- LMSYS Chatbot Arena: The Gemma-3-27B-IT model achieved a preliminary Elo score of 1338 (as of March 8, 2025). This positions it strongly among top-performing open models in blind pairwise human evaluations, notably surpassing Gemma-2-27B-IT (1220 Elo) and even larger models like Llama-3.1-405B-Instruct (1269 Elo) at that time. This highlights the effectiveness of the post-training recipe.

- Standard Benchmarks (Table 6): Across various academic benchmarks, Gemma 3 models show consistent gains. A key finding is that the Gemma3-4B-IT model frequently matches or outperforms the much larger Gemma2-27B-IT on challenging tasks like MATH, HiddenMath, and MMLU-Lite. The flagship Gemma3-27B-IT closes the gap significantly with the powerful, proprietary Gemini-1.5-Pro on several benchmarks, particularly MATH (89.0 vs. 91.8 for Gemini Pro) and MMLU-Pro (67.5 vs. 75.8), while also showing strong coding (LiveCodeBench) and reasoning performance.

Here’s a comparative visualization for selected benchmarks based on Table 6 data:

```json
{
  "data": [
    {
      "x": ["MMLU-Pro", "LiveCodeBench", "MATH", "HiddenMath", "MMMU (val)", "Global MMLU-Lite"],
      "y": [56.9, 20.4, 55.6, 14.8, null, 68.6],
      "name": "Gemma 2 27B IT",
      "type": "bar"
    },
    {
      "x": ["MMLU-Pro", "LiveCodeBench", "MATH", "HiddenMath", "MMMU (val)", "Global MMLU-Lite"],
      "y": [67.5, 29.7, 89.0, 60.3, 64.9, 75.1],
      "name": "Gemma 3 27B IT",
      "type": "bar"
    }
  ],
  "layout": {
    "title": {"text": "Gemma 3 27B IT vs Gemma 2 27B IT Performance"},
    "yaxis": {"title": "Score (%)"},
    "barmode": "group",
    "legend": {"yanchor": "top", "y": 0.99, "xanchor": "left", "x": 0.01}
  }
}
```

Vision and Multimodal Performance

- Gemma 3 achieves robust performance on diverse vision-language benchmarks (Tables 11 & 16), demonstrating proficiency in tasks requiring OCR (DocVQA, TextVQA), understanding of structured information (ChartQA), and general visual question answering (MMMU, VQAv2).

- The effectiveness of the Pan & Scan (P&S) mechanism is empirically validated (Table 8), providing substantial score increases on benchmarks sensitive to image resolution and text legibility, such as DocVQA (+8.2 points for 4B, +4.8 for 27B) and InfoVQA (+12.9 for 4B, +17.0 for 27B). This confirms P&S’s role in overcoming the fixed-resolution limitation of the vision encoder.

- Initial results on video benchmarks (Table 17) suggest foundational capabilities in understanding temporal sequences of images.

Long Context Evaluation

- Ablation studies focusing on RoPE rescaling (Figure 7) indicate successful generalization up to the target 128K context length for the pre-trained models, maintaining reasonable perplexity.

- Evaluations on dedicated long-context benchmarks like RULER and MRCR (Table 15) confirm the models’ ability to process and utilize long inputs. However, the observed performance degradation when moving from 32K to 128K contexts suggests that while the architecture supports the length, effectively performing complex reasoning over the entire span, as opposed to information retrieval or localized reasoning, remains challenging.
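Position interpolation (Chen et al., 2023), which the RoPE changes described earlier draw on, divides positions by a scale factor so that a longer sequence maps onto the position range seen during training. A minimal NumPy sketch follows; the head dimension, the scale factor of 4 used in the checks (32K to 128K), and the interleaved pairing convention are assumptions for illustration, not confirmed Gemma 3 settings.

```python
import numpy as np


def rope_angles(positions, head_dim=128, base=1_000_000.0, scale=1.0):
    """Rotation angle for every (position, frequency) pair.

    scale > 1 applies position interpolation: positions are divided by `scale`
    so a longer sequence is squeezed into the position range seen in training.
    """
    inv_freq = base ** (-np.arange(0, head_dim, 2) / head_dim)
    pos = np.asarray(positions, dtype=np.float64) / scale
    return np.outer(pos, inv_freq)


def apply_rope(x, angles):
    """Rotate interleaved pairs (x[2i], x[2i+1]) of each row by the matching angle.

    ASSUMPTION: interleaved pairing; some implementations rotate (x[i], x[i + d/2]).
    """
    x1, x2 = x[..., 0::2], x[..., 1::2]
    cos, sin = np.cos(angles), np.sin(angles)
    out = np.empty_like(x)
    out[..., 0::2] = x1 * cos - x2 * sin
    out[..., 1::2] = x1 * sin + x2 * cos
    return out


if __name__ == "__main__":
    # Last positions of a 128K sequence, interpolated by a HYPOTHETICAL factor of 4.
    scaled = rope_angles([131068], scale=4.0)
    print(np.allclose(scaled, rope_angles([32767])))
```

With `scale=4.0`, position 131,068 receives exactly the angles that position 32,767 had without scaling, and attention scores still depend only on the relative offset between query and key. Interpolation keeps positions in range, but it does not by itself teach the model to reason over the whole span, which fits the degradation noted above.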

5. Ablation Studies Highlights

The report includes several informative ablations shedding light on the impact of specific design choices:

- Local:Global Attention Ratio: Varying the ratio of local to global layers (e.g., 3:1, 7:1 vs. the chosen 5:1) had minimal impact on model perplexity (Figure 3). This suggests the architecture is robust to this choice, and that the 5:1 ratio was likely chosen as a balance between KV cache savings and model quality.

- Sliding Window Size: Reducing the window size for local attention layers significantly (down to 1024 tokens) incurred only a minor perplexity penalty (Figure 4). This finding is crucial, as it allows for substantial KV cache reduction without compromising core language modeling ability.

- KV Cache Memory Savings: The interleaved architecture provides dramatic memory savings during inference compared to a standard global-only attention model, especially at longer sequence lengths (Figures 5 & 6). This is the primary enabler for running Gemma 3 with 128K context on accessible hardware.

- Teacher Model Size in Distillation: An interesting finding (Figure 8) suggests that for longer training durations, distilling from a larger teacher model yields better student performance (lower perplexity). This contrasts with some findings from shorter-duration studies where smaller teachers can be optimal, highlighting the importance of training regime length in distillation dynamics.

6. Memorization and Privacy

Addressing memorization of training data is critical for responsible deployment, particularly for open models.

- Gemma 3 models demonstrate a marked reduction in both exact and approximate memorization rates compared to Gemma 2 and other previous models evaluated (Figure 9, note the log scale). This is a significant improvement from a privacy and intellectual property perspective.

- Memorization rates are lowest for the 1B model and increase slightly with model size. Approximate memorization (allowing for small edits) occurs roughly 24 times more frequently than exact verbatim memorization, on average across the models.

```json
{
  "data": [
    {
      "x": ["Gemma 3 1B", "Gemma 3 4B", "Gemma 3 12B", "Gemma 3 27B", "Gemma 2 2B", "Gemma 2 9B", "Gemma 2 27B"],
      "y": [0.0002, 0.001, 0.0015, 0.002, 0.01, 0.05, 0.1],
      "name": "Approx Memorization",
      "type": "bar"
    },
    {
      "x": ["Gemma 3 1B", "Gemma 3 4B", "Gemma 3 12B", "Gemma 3 27B", "Gemma 2 2B", "Gemma 2 9B", "Gemma 2 27B"],
      "y": [0.00001, 0.00004, 0.00006, 0.00008, 0.001, 0.005, 0.01],
      "name": "Exact Memorization",
      "type": "bar"
    }
  ],
  "layout": {
    "title": {"text": "Memorization Rates (Log Scale, Illustrative Values)"},
    "yaxis": {"title": "Rate (%)", "type": "log", "tickformat": ".5f"},
    "barmode": "group",
    "legend": {"yanchor": "top", "y": 0.99, "xanchor": "left", "x": 0.01}
  }
}
```

(Note: Y-axis values are illustrative, based on the visual trend and relative differences shown in Figure 9, as exact numbers aren’t provided in the text.)

- Importantly, using Google Cloud’s Sensitive Data Protection (SDP) tool, no personally identifiable information (PII) was detected within the model outputs identified as memorized content. This suggests that the PII filtering applied during data preparation was effective.
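The exact/approximate distinction can be made concrete with a small checker. This sketch assumes the setup used in the Gemma memorization evaluations, as we understand it: the model is prompted with a 50-token prefix from training data, its continuation is compared with the true 50-token suffix, and a continuation counts as approximately memorized if its token-level edit distance is at most 10% of the suffix length. Treat those thresholds and the function names as assumptions.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (insert/delete/substitute cost 1)."""
    prev = list(range(len(b) + 1))
    for i, ta in enumerate(a, start=1):
        cur = [i]
        for j, tb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ta
                           cur[j - 1] + 1,             # insert tb
                           prev[j - 1] + (ta != tb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def classify_memorization(generated, true_suffix, approx_threshold=0.10):
    """Label a continuation of a training prefix as 'exact', 'approximate', or 'none'.

    Exact matches are reported as 'exact' rather than also counted as approximate.
    """
    if list(generated) == list(true_suffix):
        return "exact"
    if edit_distance(generated, true_suffix) <= approx_threshold * len(true_suffix):
        return "approximate"
    return "none"


if __name__ == "__main__":
    suffix = list(range(50))          # stand-in token ids for a 50-token suffix
    near = suffix[:49] + [-1]         # one token differs
    print(classify_memorization(suffix, suffix), classify_memorization(near, suffix))
```

Because the approximate criterion tolerates a few edited tokens, it naturally fires far more often than exact matching, consistent with the roughly 24x gap reported above.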

7. Responsibility, Safety, Security

DeepMind emphasizes a continued commitment to safety and responsibility, integrating processes throughout the development lifecycle.

- Governance and Assessment: The approach mirrors previous Gemma releases, balancing the benefits of open models with awareness of potential misuse. Risk assessments are conducted, considering the new multimodal and long-context capabilities.

- Safety Policies & Mitigations: Safety filtering is applied to pre-training data. Instruction tuning incorporates alignment with Google’s safety policies (covering areas like hate speech, harassment, dangerous content, child safety, non-consensual sexual content, and promoting illegal acts or severely harmful ideologies) using both supervised fine-tuning (SFT) and RLHF.

- Assurance Evaluations: Models undergo internal safety evaluations using adversarial prompts and human rating to assess policy violation rates, which are reported as low overall. Specific evaluations for knowledge related to Chemical, Biological, Radiological, and Nuclear (CBRN) threats indicate low capability in these high-risk domains.

- Responsible Open Models Approach: A system-level view is advocated, emphasizing that safety depends not just on the model but also on the application’s design and deployment environment.

8. Critical Analysis and Discussion

Gemma 3 undoubtedly pushes the state-of-the-art for open models in its parameter class, particularly regarding efficient multimodality and long context. However, a critical perspective reveals several nuances:

- The Role of Distillation: The remarkable performance gains, especially for the instruction-tuned models relative to their base counterparts and even Gemma 2, appear heavily reliant on knowledge distillation from highly capable (presumably proprietary, Gemini-family) teacher models. While effective, this underscores that achieving SOTA performance with Gemma 3 is not solely a function of its architecture or pre-training data, but significantly influenced by the quality of the teacher. The specifics of the “novel post-training recipe” remain opaque, limiting full replicability and understanding of the capability drivers.

- Defining “Long-Context Utilization”: The architecture successfully enables 128K context processing within reasonable memory constraints. However, performance metrics like perplexity and benchmark scores (e.g., the drop on MRCR from 32K to 128K) suggest a gap between processing long context and effectively reasoning over its entire span for complex tasks. The model might excel at retrieval or tasks where relevant information is localized, but deeper integration of information across the full 128K tokens might still be limited.

- Vision Component Trade-offs: Employing a frozen, relatively small (400M) vision encoder and condensing its output to 256 tokens prioritizes efficiency and simplifies training. However, this may inherently limit the richness of visual feature extraction and the potential for deep fusion between visual and language modalities compared to approaches with larger, jointly trained vision components. The P&S mechanism, while effective, introduces inference latency and complexity.

- Disentangling Contributing Factors: The report presents numerous improvements simultaneously (architecture, tokenizer, data mix, distillation, RL recipe). While ablations isolate some factors (like attention ratios), fully disentangling the precise contribution of each element (e.g., how much gain comes from the new tokenizer vs. the data mix vs. architectural tweaks alone) is difficult based on the provided results.

- Evaluation Considerations: While benchmark decontamination is performed, the possibility of subtle information leakage influencing performance on standard benchmarks always exists in LLM evaluation. Furthermore, the strong Chatbot Arena performance, while valuable, reflects preference on a specific platform and may not perfectly correlate with performance on all downstream tasks.

Conclusion

Gemma 3 stands as a robust and compelling addition to the open model landscape. Its intelligent architectural design, particularly the interleaved local/global attention, offers a pragmatic solution to the challenge of long-context inference on accessible hardware. The seamless integration of vision capabilities, enhanced by the practical P&S mechanism, broadens its applicability significantly.

Performance-wise, Gemma 3 models, especially the instruction-tuned variants, demonstrate impressive capabilities, often exceeding models with far larger parameter counts. This success appears heavily driven by sophisticated knowledge distillation and reinforcement learning strategies refined within Google’s broader AI research ecosystem. The significant reduction in training data memorization compared to prior models is a crucial step forward for privacy and responsible AI practices.

Despite reliance on undisclosed teacher models for peak performance and open questions regarding the depth of long-context reasoning, Gemma 3 offers a potent combination of performance, efficiency, multimodality, and improved safety characteristics. It provides a valuable asset for researchers and developers seeking powerful, openly available models capable of tackling a wider range of tasks than previous generations.

If you found this useful, please cite this as:

Goyal, Naman (Apr 2025). Gemma 3 Technical Deep Dive - Architecture, Performance, and Implications. https://namangoyal.com.

or as a BibTeX entry:

```bibtex
@article{goyal2025gemma-3-technical-deep-dive-architecture-performance-and-implications,
  title  = {Gemma 3 Technical Deep Dive - Architecture, Performance, and Implications},
  author = {Goyal, Naman},
  year   = {2025},
  month  = {Apr},
  url    = {https://namangoyal.com/blog/2025/gemma3/}
}
```

References

- Chen et al. (2023). Extending context window of large language models via positional interpolation. arXiv preprint arXiv:2306.15595.
- Wortsman et al. (2023). Small-scale proxies for large-scale transformer training instabilities. arXiv preprint arXiv:2309.14322.
- Chung et al. (2023). Unimax: Fairer and more effective language sampling for large-scale multilingual pretraining. arXiv preprint arXiv:2310.17640.
- Hinton et al. (2015). Distilling the knowledge in a neural network. In NIPS Deep Learning and Representation Learning Workshop.
- Ramé et al. (2024). WARP: On the Benefits of Weight Averaged Rewarded Policies. arXiv preprint arXiv:2406.07874.
- Gehring et al. (2024). RLEF: Grounding Code LLMs in Execution Feedback with Reinforcement Learning. arXiv preprint arXiv:2410.02089.
- DeepSeek-AI (2024). DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. arXiv preprint arXiv:2402.03300.
- Lambert et al. (2024). Tülu 3: Pushing frontiers in open language model post-training. arXiv preprint arXiv:2411.15124.
- Jacob et al. (2018). Quantization and training of neural networks for efficient integer-arithmetic-only inference. In CVPR.
